Introduction

An Azure Storage Account is Microsoft's way of grouping its cloud storage offerings. There are four types of services:

- Blob: object storage, similar to AWS S3

- File: file share in the cloud

- Table: NoSQL in the cloud

- Queue: message queue

“Wait, similar to AWS S3? Do I need to worry about the infamous public bucket then?!?” Yes, you should. Attackers have been crawling for public containers using tools such as MicroBurst, and some websites take this a step further, like GreyHatWarfare, which lists both public AWS S3 buckets and Azure Blobs.

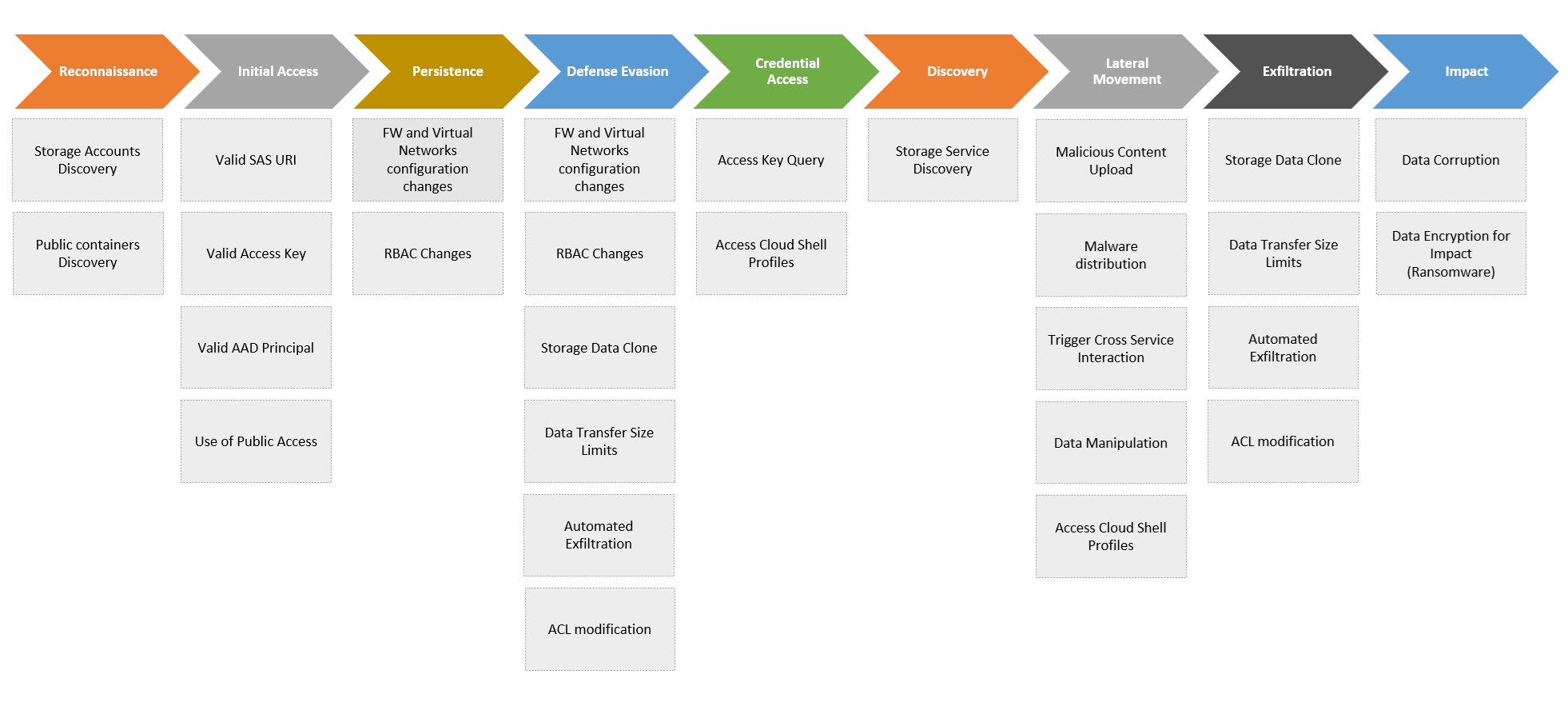

Microsoft recently published its threat matrix for storage services, based on the MITRE ATT&CK framework. They had already done similar work for Kubernetes, to be used with their AKS offering.

Blob Storage access control

Access level

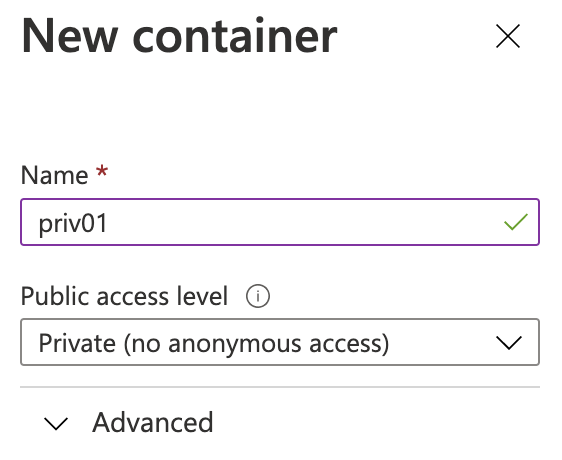

The access level of a blob container can be set to one of three values:

- Private: well, private

- Blob: anonymous access, but the attacker must know the full path of the file, not only the container name (URLs in the format https://<storage account name>.blob.core.windows.net/<container name>/<file path/name>)

- Container: anonymous access, and anyone can also list the content of the container
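To make the difference between the Blob and Container levels concrete, here is a minimal Python sketch that builds the two kinds of URL an anonymous client would request. The account, container and file names are made up for illustration; the `restype=container&comp=list` query string is the one used by the List Blobs operation of the Blob service REST API.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_path: str) -> str:
    """URL of a single blob: readable anonymously at the Blob and Container levels."""
    return (
        f"https://{account}.blob.core.windows.net/"
        f"{quote(container)}/{quote(blob_path)}"
    )


def container_list_url(account: str, container: str) -> str:
    """URL of the List Blobs operation: answers anonymously only at the Container level."""
    return (
        f"https://{account}.blob.core.windows.net/"
        f"{quote(container)}?restype=container&comp=list"
    )


# Hypothetical names, for illustration only.
print(blob_url("examplesa01", "reports", "2021/q1 summary.pdf"))
print(container_list_url("examplesa01", "reports"))
```

With the Blob level, only the first URL can succeed without credentials, which is why the attacker has to guess or learn the full file path.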

Microsoft introduced a guard at the Storage Account level a while ago: you can prevent any container within a given Storage Account from being made public.

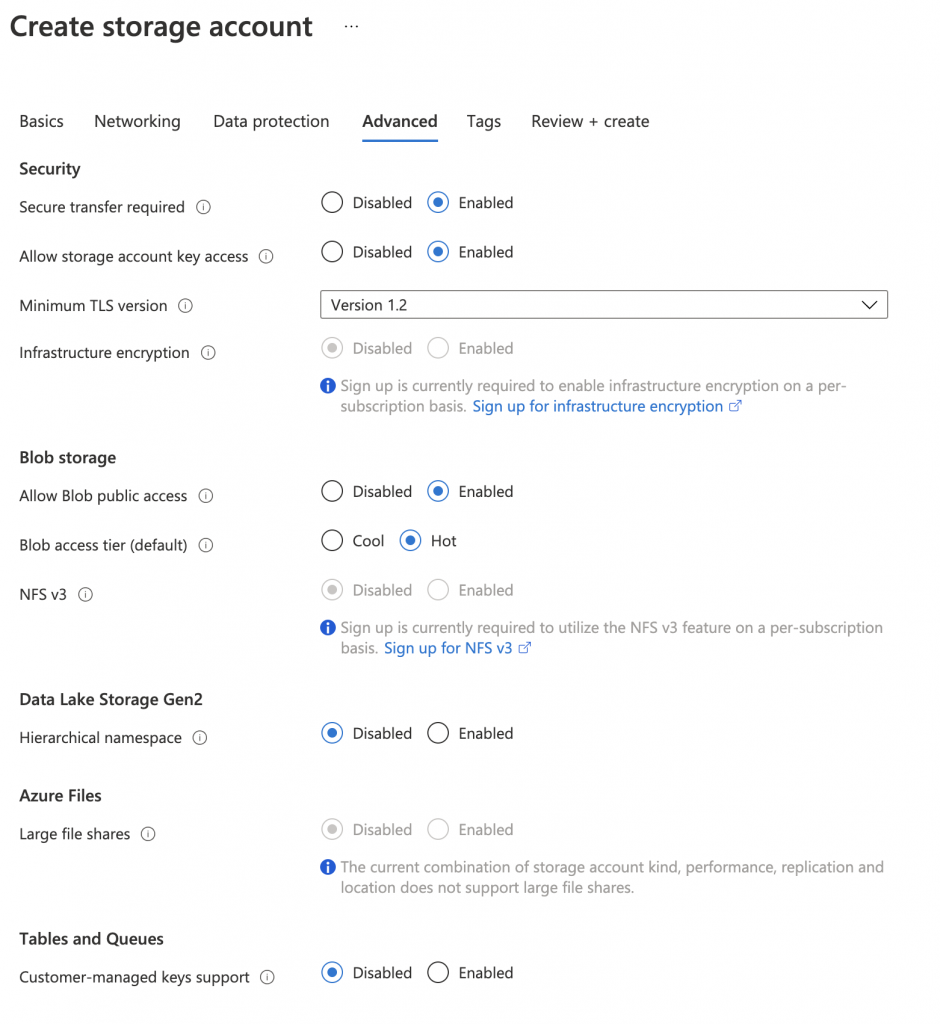

Unfortunately, the default when creating a Storage Account is Allow public access: enabled…

Yet, when you create a blob container within a Storage Account where public access is allowed, it is private by default.

Shared Access Signature (SAS)

A SAS is another control: it grants access to a private container to anyone holding the signed URI. The following signed parameters are appended to the URI of the container to define the privileges granted on the resources:

- Time: set a validity period for the signed URI by setting a start (optional) and end (mandatory) date

- IP: source IP addresses that are allowed to use the SAS

- Permissions: define the privileges allowed by using this token (read, write, list, …)

- Access policy: bind an access policy to the token instead of direct permissions

- Protocol: limit usage of SAS to HTTPS connections only
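As an illustration of how these controls show up in practice, the sketch below reads the query string of a signed URL and maps the standard SAS parameter names (`st`, `se`, `sip`, `sp`, `si`, `spr`) back to the list above. The URL, account name and signature are fabricated, and the two checks in `sas_findings` are only examples of what a review could flag, not an exhaustive audit.

```python
from urllib.parse import parse_qs, urlsplit

# SAS query parameter names mapped to the controls listed above.
SAS_FIELDS = {
    "st": "start time",
    "se": "expiry time",
    "sip": "allowed IP range",
    "sp": "permissions",
    "si": "stored access policy",
    "spr": "allowed protocols",
}


def describe_sas(url: str) -> dict:
    """Return the SAS controls present in the query string of a signed URL."""
    params = parse_qs(urlsplit(url).query)
    return {label: params[key][0] for key, label in SAS_FIELDS.items() if key in params}


def sas_findings(url: str) -> list:
    """Flag two loose settings: no source IP restriction, and HTTP allowed."""
    params = parse_qs(urlsplit(url).query)
    findings = []
    if "sip" not in params:
        findings.append("no IP restriction")
    # When spr is omitted, the service accepts both HTTPS and HTTP.
    if params.get("spr", ["https,http"])[0] != "https":
        findings.append("HTTP allowed")
    return findings


# Fabricated example URL; the signature is a placeholder.
url = (
    "https://examplesa01.blob.core.windows.net/reports"
    "?sv=2020-08-04&sp=rl&se=2021-06-01T00%3A00%3A00Z&spr=https&sig=REDACTED"
)
print(describe_sas(url))
print(sas_findings(url))  # ['no IP restriction']
```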

Stored Access policy

A stored access policy defines the same permissions you could assign directly in a SAS token, but has the following advantages:

- Use the same access policy for multiple SAS tokens

- Generate SAS tokens without specifying the permissions (i.e. limit the risk of errors)

Azure Firewall

Another access control, at the network layer this time, is to use the firewall rules of the Storage Account to limit the IP addresses that are allowed to access your Storage Account.

Prevention

Microsoft's recommendation is to prevent the creation of public blob storage unless you really need it. That's nice, but in practice you might have to serve those blobs from a public-facing website.

Architecture

If you need such public blob storage within the same resource group, the recommendation is to create dedicated Storage Accounts for it. Those will have Allow public access set to enabled, while the Storage Accounts that hold private blob storage will have this option disabled.

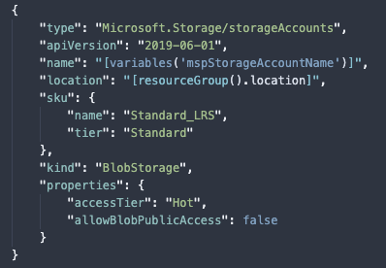

ARM template instead of the UI

Another best practice in cloud environments is to leverage infrastructure as code, using solutions like Terraform or, in the case of Azure, ARM templates. If you don't need any public blob storage, you can hard-code in your templates that Storage Accounts are created with allowBlobPublicAccess set to false.

You could then use something like InSpec to validate that the deployed Storage Accounts indeed conform to the Allow public access: disabled requirement. Semgrep supports JSON files, so technically you could also write a validator for ARM templates with this tool 🙂
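To give an idea of what such a template check boils down to, here is a minimal Python sketch (not InSpec or Semgrep) that walks the top-level resources of an ARM template and reports Storage Accounts that do not explicitly set allowBlobPublicAccess to false. The sample template is a trimmed, hypothetical fragment. Nested resources are not visited and template expressions such as parameters are not resolved, so anything other than a literal false is reported.

```python
import json

STORAGE_TYPE = "Microsoft.Storage/storageAccounts"


def accounts_allowing_public_access(template: dict) -> list:
    """Names of Storage Accounts that do not explicitly set allowBlobPublicAccess to false."""
    offenders = []
    for resource in template.get("resources", []):
        if resource.get("type") != STORAGE_TYPE:
            continue
        props = resource.get("properties", {})
        # A missing property or an unresolved expression is treated as non-compliant.
        if props.get("allowBlobPublicAccess") is not False:
            offenders.append(resource.get("name", "<unnamed>"))
    return offenders


# Trimmed, hypothetical template fragment for illustration.
sample = json.loads("""
{
  "resources": [
    {"type": "Microsoft.Storage/storageAccounts", "name": "privatesa01",
     "properties": {"allowBlobPublicAccess": false}},
    {"type": "Microsoft.Storage/storageAccounts", "name": "legacysa02",
     "properties": {}},
    {"type": "Microsoft.Network/virtualNetworks", "name": "vnet01"}
  ]
}
""")
print(accounts_allowing_public_access(sample))  # ['legacysa02']
```

A check like this could run in a CI pipeline before deployment, with the audit policy described further down acting as the safety net for resources created outside of it.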

Azure Policy

Now, the most Azure-native way of preventing the creation of public blob storage is to assign the policy that disallows it! It is still marked as preview but has worked so far.

In this scenario, you could apply this policy to all your subscriptions and exclude the required resource groups once you have validated that this does not introduce a data leakage risk.

Azure CDN

I tested another method to expose a given blob storage publicly while keeping public access disabled at the Storage Account level: leveraging the Microsoft.Azure.Cdn service principal. This works for AWS S3, where you can grant the CloudFront service access to a bucket so that only access through it is allowed.

However, this didn't work as expected, and I still don't know whether I missed a step or this is simply not feasible. I opened an issue to keep track of it, should you have feedback to share.

Detection

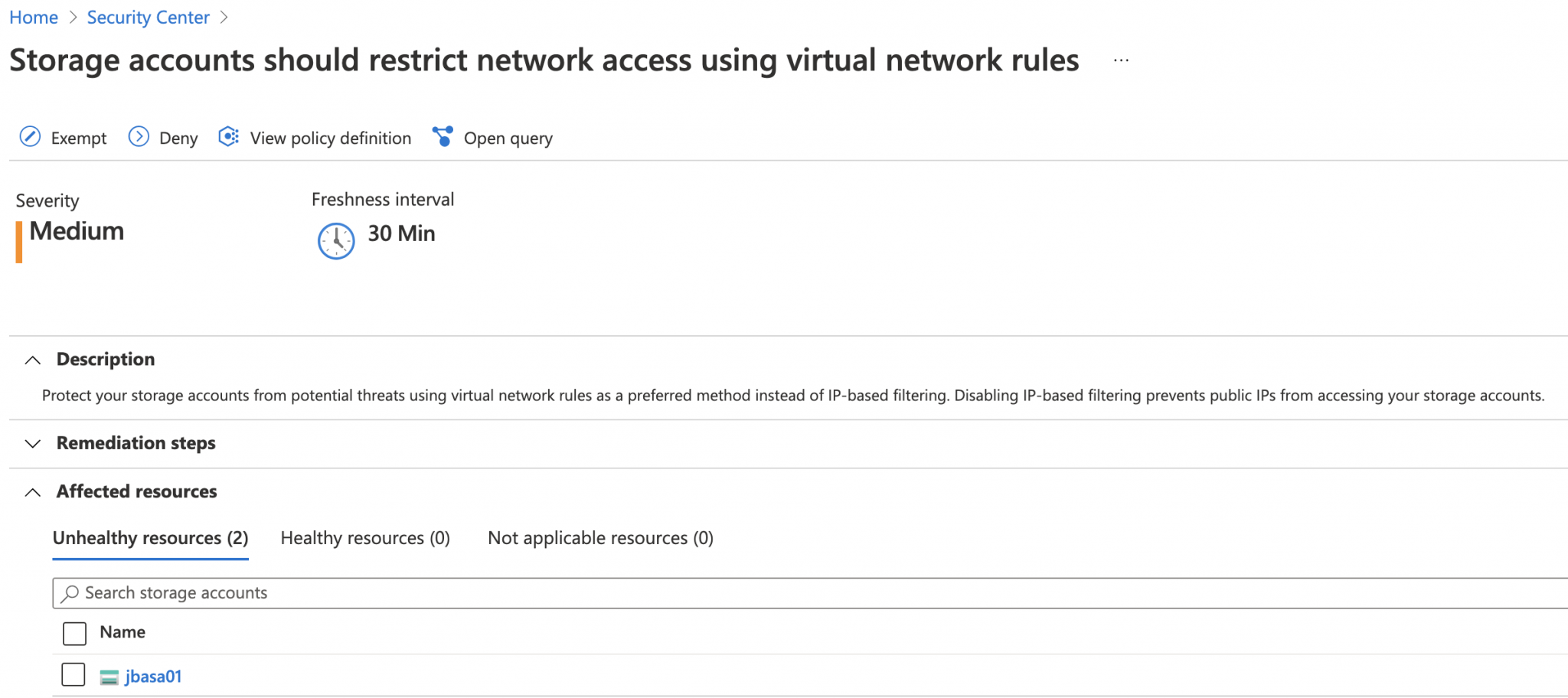

Policy in audit mode

If you cannot block the creation of Storage Accounts with public access enabled, you can assign the corresponding policy in audit mode to be alerted whenever such a Storage Account is created (or reconfigured).

It is unfortunately not possible to audit at the container level for now. Writing a policy to detect the publicAccess property of a container set to Blob or Container is not yet supported by Azure Policy.

You can then use KQL queries like the following to start building your alerts in Sentinel.

    AzureActivity
    // Keep policy events that are not purely informational
    | where Category == 'Policy' and Level != 'Informational'
    | extend p = todynamic(Properties)
    | where p.isComplianceCheck == "False"
    | extend policies = todynamic(tostring(p.policies))
    // One row per policy attached to the event
    | mv-expand policy = policies
    | where policy.policyDefinitionReferenceId == "StorageDisallowPublicAccess"
    | project TimeGenerated, ResourceGroup, Resource, policy

Diagnostic setting

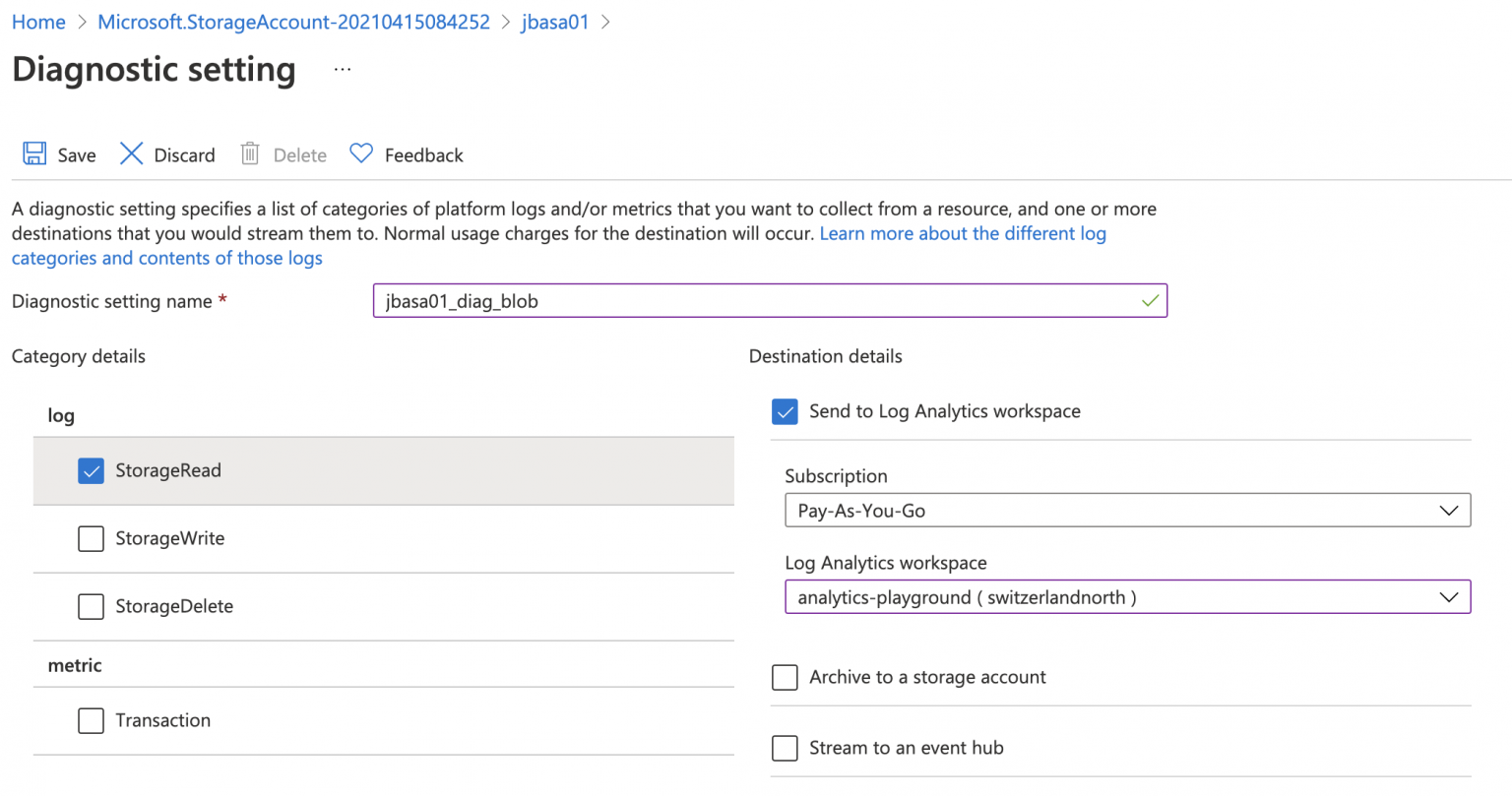

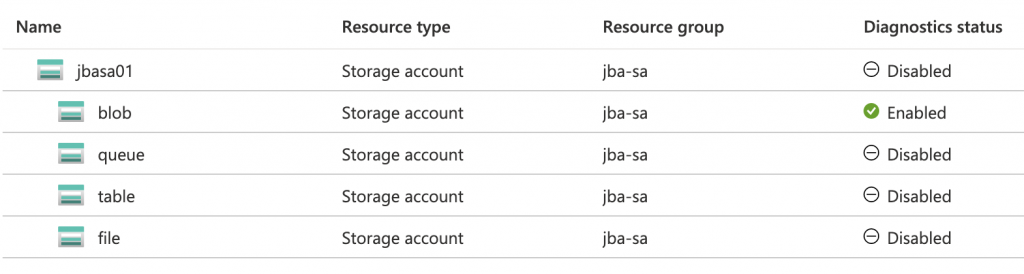

If one of your administrators deploys a public blob storage and you have a potential data leak, you will want to know whether data was accessed and for how long (typical questions in such investigations).

In order to retrieve access logs for a blob storage, you can now create a Diagnostic Setting to log StorageRead accesses (among others) and forward those events to a Log Analytics workspace used by Sentinel for example.

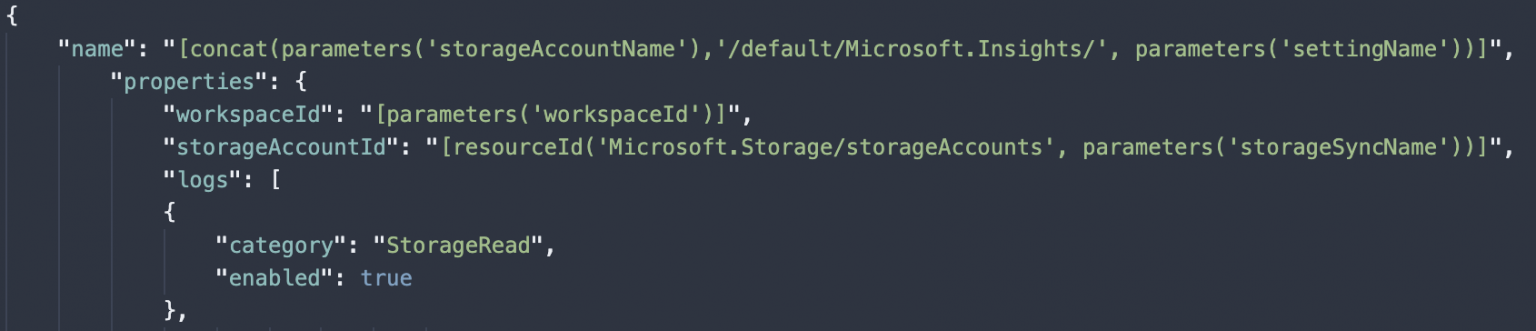

This can also be defined in ARM templates by setting the corresponding properties of the diagnostic setting. This is fully documented by Microsoft.

A new feature also recently entered preview: automatically enabling this setting through an Azure Policy assignment. This is a great way to ensure that newly created resources (also in preview: Key Vault, AKS, SQL Databases) are onboarded into Azure Sentinel.

Once set up (and enabled; remember, this is not automatic in cloud environments ;)), you can use KQL queries such as the following basic one to list anonymous accesses to monitored Storage Accounts.

    let watchlist = dynamic(["jbasa01"]);
    StorageBlobLogs
    | where Category == "StorageRead" and StatusCode == "200" and AuthenticationType == "Anonymous"
    | where AccountName in (watchlist)

Depending on the usage of your Storage Account, you could also build a query that looks at historical data and checks the UserAgentHeader field for values not seen in the previous days, like in this query for SharePoint activities.

Microsoft also started to provide hunting queries for StorageBlobLogs on its GitHub repository. Follow this space, as it will surely receive contributions from Microsoft or the growing community.
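The logic behind such a "never seen before" query can be sketched in a few lines of Python, here applied to log records exported as dictionaries. TimeGenerated and UserAgentHeader are the column names used in the queries of this post; the 14-day baseline, the 24-hour recent window and the sample records are arbitrary assumptions for illustration.

```python
from datetime import datetime, timedelta


def new_user_agents(records, now, baseline_days=14, recent_hours=24):
    """User agents seen in the recent window but not in the baseline period before it."""
    recent_start = now - timedelta(hours=recent_hours)
    baseline_start = recent_start - timedelta(days=baseline_days)
    baseline, recent = set(), set()
    for record in records:
        ts, agent = record["TimeGenerated"], record["UserAgentHeader"]
        if baseline_start <= ts < recent_start:
            baseline.add(agent)
        elif recent_start <= ts <= now:
            recent.add(agent)
    return sorted(recent - baseline)


# Made-up records for illustration.
now = datetime(2021, 5, 10, 12, 0)
records = [
    {"TimeGenerated": datetime(2021, 5, 3, 9, 0), "UserAgentHeader": "Mozilla/5.0"},
    {"TimeGenerated": datetime(2021, 5, 10, 8, 0), "UserAgentHeader": "Mozilla/5.0"},
    {"TimeGenerated": datetime(2021, 5, 10, 9, 0), "UserAgentHeader": "curl/7.68.0"},
]
print(new_user_agents(records, now))  # ['curl/7.68.0']
```

In Sentinel the same idea would typically be expressed as a join between a baseline time range and a recent one; the Python version is only meant to show the comparison being made.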

Defender for Storage

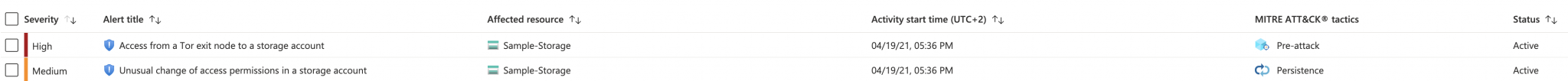

Obviously, Microsoft has you covered with Defender for Storage, should you enable it (recommended, but at an added cost :)). Once enabled for your Storage Accounts, you can use alerts such as:

- Detect accesses from known suspicious IPs (e.g. Tor exit nodes)

- Detect anomalous behavior, i.e. changes in the access pattern of resources

The full list of Defender for Storage alerts is available in Microsoft's documentation.

You can then derive KQL queries from the following base to create alerts in Sentinel.

    SecurityAlert
    | where ProductName == "Azure Security Center"
    | where AlertType contains "Storage"